☎️ E18: The State of Silent Speech and an Emerging UX Paradigm

In Conversation with Sato Ozawa, Venture Builder in Human Augmentation at Oval Ventures on ultrasonic devices, silent speech and cultural nuances in UX

I’m Lawrence Lundy-Bryan. I do research for Lunar Ventures, a deep tech venture fund. We invest €500k-1m at pre-seed & seed turning science fiction into science reality. Get in touch lawrence@lunar.vc. I curate State of the Future, a deep tech tracker and every week I explore interesting stuff in deep tech. It’s for people who don’t take themselves too seriously. Subscribe now before it’s too late.

This newsletter is the output of my weekly research. Sometimes I’m exploring non-conventional computing and I’ll bash out analog, neuromorphic, photonic computing. etc. Then I’ll be like, man it really feels like everry semiconductor investor I speak to tells me the supply chain just won’t adapt. It’s like the body rejecting a foreign body. So off I go and explore shorter term incremental technologies like chiplets, NA-EUV, and High-Bandwidth Memory. It’s relatively structured in the sense I have a map of adjacent technologies I’m actively exploring. I have 2.5D advanced packaging, GaN devices, High Frequency Power Si Vacuum Transistors, PCM (Phase Change Memory). All great stuff, all structured nicely in my gantt chart. Interviews lined up. Claude2 all powered up and ready to go. One technology per week. You’re welcome.

But research isn’t like that. And in fact, this structuring is the best way to miss the most interesting ideas and potential investment theses (Another good way of course is to just follow VC Twitter and go to VC networking events. Sorry not sorry). This is also why I don’t believe research can be KPIed. The most interesting ideas are the ones that are sparked from writing about something else. Or noting that two interviewees have seperately mentioned the same thing in different contexts. Most of the time, these leads are dead-ends. But the learnings compound.

So here we are talking about silent speech. It’s not yet an assessment in State of the Future. After it was mentioned in my interview with Wisear, I sort of found it interesting but didn’t follow it up properly. That was until an old friend, Sato, reached out.

We met when I was helping run the Radicalxchange fellowship program a few years ago. He is a Venture Builder in Human Augmentation at Oval Ventures. He says he “is a first-time fund manager who can draw and code, 7x founder, contrarian, and Japanese father of three mini Aussies.” He is building a silent speech company using ultrasonic waves instead of the jawbone vibration.

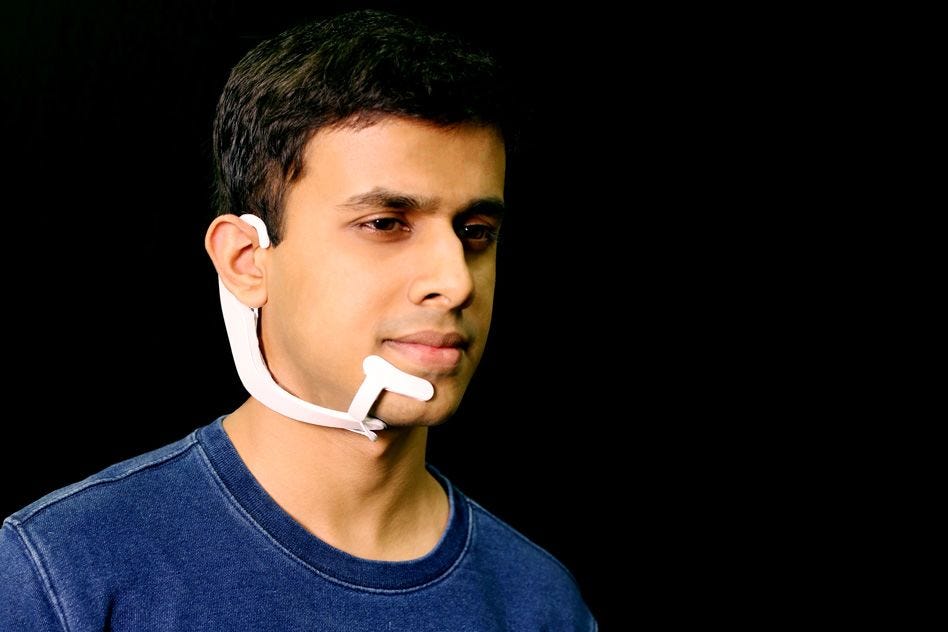

Silent speech sounds a bit weird to me. I mean look at this guy below?! But, also, what do I know? And the best ideas are the ones that sound crazy? Dead end or investment theses? You decide.

👋 Enjoy learning about this weird new UX idea. Peace, Lawrence

(p.s. if YOU are an old friend interested in something weird, get in touch. Only get in touch if it’s weird though. But *Paul* please not you. No the nation state is not going to be replaced by the cloud lol. I already lost two of the best years of my life to this nonsense)

5 Key Takeways

💬 Silent speech still just seems weird, UX tends toward more intuitive over time (away from CLI to GUI to voice/gesture/eye tracking) silent speech seems like a backward step? But maybe talking to yourself out loud in public is even weirder in Japan and Korea? Some fasncinating cultural nuances to think about about in terms of UX.

🦻If we do end up with silent speech, Sato is compelling on the benefits of ultrasound over optical methods, electromagnetic articulography (EMA), and electromyography (EMG) but I still think the winning form factor/modality is the ear if the signal capture gets good enough (a la Wisear from E15)

👋 Ultrasonic sensors are already quite common for proximity sensors and gesture recognition but I didn’t quite know you could pick them up for $1, obviously depending on depth, resolution, steering, processing capabilities, etc. At these sorts of prices we should expect all devices to have increaseinlg ultrasonic capabilities.

⌚️ An interesting line of inquiry now is CMUTs (Capacitive Micromachined Ultrasonic Transducers) that are fabricated using CMOS- compatible processes. In theory this would allow for co-integration of CMUT with other sensors like CMOS imaging sensors. Applications could include wearable medical imaging devices, skin health, health health, and a whole bunch of other interesting modalities.

👄 > 🦻Sato’s is thinking about silent speech as a discrete product and UX. Wisear is bundling silent speech with facial micro gestures for selection, electrooculography instead of eye movement for navigation, and silent speech for complex commands. Sato wants to get the signal close to the mouth trading off fashion for performance. Wisear are hoping to get good enough signals from the ears which is more fashionable but *likely* worse accuracy. This fashion versus performance trade-off is already a huge question for product designers where performance is heavily bounded by weight, size, form factor for smart glasses, hearables, wearables, etc.

Makes you think doesn’t it.

☎️ The Conversation ☎️

Lawrence: Thanks for being with me Sato, we need to get orientated, can you tell me about the idea from first principles?

The idea is silent speech using ultrasonic waves. We have an ultrasonic transducer that can emit and detect ultrasonic waves positioned here the user's mouth. You emit waves that reflect back to the transducer and then feed the pattern and intensity back to algorithms to reconstruct the waves into speech. If you do this you can’t have to vocalise speech and you address speech privacy issues, speech in noisy environments, speech impairments, etc.

Lawrence: How exactly does it differ from alternative approaches? Wisear from edition 15 are doing silent speech by bone conduction. I know others use ultrasound for visual feedback on tongue movement.

The major approach is using video to read lip movement. That is probably the most feasible way to implement this idea. But that approach is limited to data you can get from images. We can't capture any accent or intonation or volume. For tonal languages like Chinese it’s key where one sound has four different accents. So, the image-based approach can be used to make a voice assistant recognise a limited number of commands. Still, it isn't easy to use it for human-to-human conversations. Nevertheless, we are also considering a multimodal implementation for production, and image-based could be one of the promising auxiliary modalities. Our approach uses ultrasonic waves so you capture much more information. You get mouth shape, tongue shape, vocal tract shape, and airflow from the lung, it’s much richer. We capture the combination of those elements in the ultrasonic microphone, and then we put those data into the deep neural network to generate a realistic voice.

Lawrence: Where is the ultrasonic transducer placed? Sounds like it would have to be close to or in the mouth?

We’re thinking about a couple of different approaches with our partner a major electronics manufacturer. The most likely approach is using the small tube-shaped injector, which looks like a kind of straw. We would then place it right next to the mouth, obviously we need to figure out the exact positioning to emit and detect high quality signals. Another approach if people actually can accept a more uncomfortable way is to put the mouthpiece into the mouth and then put the transducer onto the tongue. This would only really work for some medical applications like laryngectomy.

Lawrence: The trade-off you are making is wearability. I'm imagining a world in which a user is more comfortable with a small electrode on their neck versus something touching their lips. Even if you're technically superior, the wearability factor is a major issue.

It is definitely a barrier for most people. People don't want anything near or in their mouths. But there is another reason we chose this form factor. Our approach is bimodal, and the whistle analogy is the best way to get another essential piece of data.

It will be immediately apparent that our idea is from the ultrasonic vocalisation of animals like whales. Still, there is another source of inspiration. Which is the whistle language of the hill tribes. I learned a lot from the Turkish and Spanish whistle languages. Instead of using loud voices to communicate with people in the mountains, they use whistles. They can hold a conversation without using their vocal cords. From this, if we could externalise the vocal cords, we could physically muff it right after capturing.

Moreover, other analogies are familiar to this form factor. Solutions for quitting smoking include wearing a small straw around the neck as a necklace and using a whistle instead of lighting a cigarette. I have also seen products of tiny whistles for meditation. So it's not that people completely abhor a small new whistle device; it's just that there needs to be enough value.

Lawrence: Silent speech just sounds so weird. So I’m movingly lips but nothing is coming out, is that right? So I mouth words and then you turn that into text?

Yes you do mouth the words but we don’t do silent speech-to-text. The use case is silent-speech-to-voice. Obviously once you have the dataset we can convert it to text or speech, it doesn’t really matter, the key is collecting the dataset and training the model. The ideal outcome would be converting it to a real authentic voice.

Lawrence: I assume it’s very easy to train the voice model to replicate the users own voice, or maybe even a celebrity voice. That’s an interesting future. I “speak” in the voice of a famous actor. There’s another revenue stream for celebrities. Anyway, I’m still not entirely sure why the ultrasound approach is the winning approach. I understand why it’s better than lip reading, but why not electromagnetic articulography (EMA) or electromyography (EMG)?

We’ve optimised for ease of use and generalisability really. EMA get’s very high resolution but you need to put sensors on the tongue and in the mouth. That won’t work for a consumer device. EMG again needs electrodes on the skin, and then you have to worry about placement and calibration. It’s not viable. And as mentioned optical methods aren’t detailed enough and won’t work for many accents. So we are balancing accuracy, portability and adaptability really. We think this is the only approach that scales.

Lawrence: Is there something cultural or social about the way you're approaching this problem that is different from others in Europe or the US?

It is totally different in Japan, Taiwan and Korea. I think it’s the best first market because people don’ttalk loud in public spaces or can't make any phone calls in the train or like people not really comfortable to do zoom calls in a cafe. It’s very cultural. People don’t like to be overheard. They use very small voice when people are around. The speech privacy issue is more painful in Japanese culture. In 10 years maybe we can move to brain-computer interfaces and solve the issue totally but for the next 10 years we have a problem to be solved.

Lawrence: What’s changed on the demand-side to make silent speech viable? Siri has been around for years and there doesn’t appear to have been huge demand for silent speech.

Well there has always been latent demand, it’s just silent speech hasn’t been good enough until now actually to solve the problem. It might be a Western bias. In Australia, people walk around and talk to their devices and it’s not a big problem. But in Japan it’s a problem. Japanese people hesitate to using Siri in the public space. So I never see any people using the city in the public space. So, um, if that pain was solved by a silent speech interface, more Japanese people start using the system. In fact, I have some research from questionnaires and surveys that suggest a lot of people wanted to use Siri but feel uncomfortable. So I think demand is there if we have the right solution.

Lawrence: So the supply-side then, what advancements now make ultrasonic silent speech viable? Is it on the miniturisation of the transducer? Is it deep neural networks.

On the hardware side yes, miniaturisation has been key. We are working with a major electronics manufacturer and we have all the right parts, we just need to fit them into a small form factor. The transducer is already small enough and now that expensive, maybe a dollar or less. Then you just connect it to a waveform generator. None of this is about advanced materials. The leap forward is probably on the analysis and speech reconstruction side. And that has been supercharged over the past 12 months or so we large language models and especially open-source models which make the reconstruction accurate and relatively cheap. Obviously, the hard work will be in collecting relevant data to fine-tune out models, but it’s not a huge science or engineering problem now.

Lawrence: If this is mainly an LLM-enabled solution, is there a race to commercialisation? Which approach will get to market first?

In terms of R&D, we are one step behind image-based silent speech. But as I said, this is the wrong approach medium-term. We think we have a scalable approach and once we start collecting data then I can’t see any other approach competing. So even if the image-based approach gets to market, the data just won’t be good enough for them to serve many Asian languages or understand tone, accent and emotion. It just won’t be good enough.

Lawrence: What are your first use cases? I assume at first the product is going to be relatively inaccurate because you have less data, so it’s hard for me to imagine you can go straight for silent-speech interface to ChatGPT for example.

We need to accumulate a lot of data before making it usable. We won’t be able to go out unless it is highly accurate. It’s not something users will just persist with unless it just works. But that’s not as hard as you might think. One big difference from the other approach is our approach is just converting sound wave to sound wave, so we know we don't have to use any language processing in between. So even if the conversion quality is quite low, people will be able to communicate easily. It might be like a zoom conversation type of experience I think as the first implementation. Not perfect, but still usable and understandable. But sound quality is not perfect. Maybe at first it won’t sound exactly like not a real voice, but even as a first working prototype, people can talk each other with silent speech.

Lawrence: If we think of silent speech is a differentiated voice interface, do you imagine it would be eventually a accessibility feature for a voice interface? So rather than a separate product, it would be something you could turn on in with your voice assistant, for example, so that it would be a choice for some people in some countries. Or do you imagine that everybody will eventually use silent speak?

The first product will be a consumer electronics product. But eventually the technology will be more ubiquitous and adopted by all consumer devices with a microphone. So it adds functionality to all devices. But we have to ship a consumer product to demonstrate the technology first, even if eventually it becomes a pure software play.

Lawrence: I imagine a toggle feature in Apple AirPods, like transparency mode, but silent speech mode. But, the challenge is that you would need to attach a straw. This is why I liked Wisear so much, because they say, we work with your existing headphones. And that is just a much more plausible adoption path.

The first product I'm thinking is a small tube shape microphone. And probably people wear like a necklace. Then when wanted to use the silent speech, just pick up the straw from the neck and then put the tip into the mouth. So it’s not that difficult. People wear smartwatches and smart rings. A smart necklace doesn’t seem like a huge leap, although yes, I agree we would eventually want to shrink it and shrink it. Eventually I would hope, with a highly trained model we might not need to collect as much data per session so to speak, so we might be able to combine with other datasets from earphones to make the device smaller and smaller.

Sato: Let me ask you the question, based on your research, how do you think about potential use cases for silent speech? How can we demonstrate utility?

You need to do a proper use case exploration exercise, but off the top of my head, I think you need to find use cases where privacy is important so likely public spaces and where voice is the only UX that makes sense. Cases where people don’t just fall back to text. I think voice is better for text for high bandwidth, low latency conversations. Complex, sensitive and urgent communication basically. Voice calls or voice notes in public spaces like public transport is the obvious go-to. But actually it might be better to think of conversations with your AI assistant like Gemini, Siri, etc. If I picture a world of Her with everyone talking all day to their personal assistant, then silent speech rather than vocalised speech starts to make more sense. Interestingly maybe then if we think of AI assistants as a new computing platform, then silent speech could be the a new UX? It’s not out of the realm of possibility.

But more mundane utility may come from the datasets you generate. I imagine there is an awful lot you can do with datasets about tongue movements, vocal intonations, airflow, etc. This would be extremely useful for speech and language therapy and speech impairments. In fact, you might find the healthcare applications are the best first customers because obviously the pain and potential benefits are that much stronger.

For more on Sato and Oval Ventures, connect with him on Linkedin and visit oval.ventures.