Understanding Privacy Enhancing Technology (Feat. Karim Eldefrawy, Confidencial.io)

Why privacy-enhancing technologies like MPC, ZKP, and FHE struggle for adoption, why selective encryption is important, and why the era of "shift-up" in data security is coming

I’m Lawrence Lundy-Bryan. I do research for Lunar Ventures, a deep tech venture fund. We invest €500k-€1m at pre-seed & seed to turn science fiction into science reality. Get in touch at lawrence@lunar.vc. I curate State of the Future, a deep tech tracker, and every week I explore interesting stuff in deep tech. It’s for people who don’t take themselves too seriously. Subscribe now before it’s too late.

State of the Future is framed around technologies. Trusted Execution Environments. Nanomechanical Computing. NFTs. Etc. But technologies don’t solve problems. Solutions do.

So off the bat, the framing is off. The solution is to structure the exploration phase around problem statements, e.g. remove one billion tonnes of carbon dioxide from the atmosphere annually. Or protect the integrity and confidentiality of data during processing. This gives better answers than exploring seagrass carbon removal or zero-knowledge proofs. We partially address it during the novelty phase of research, where we compare a technology versus alternatives. And partially in the impact section, where we explore the convergence of technologies.

In the Trusted Execution Environments assessment, for example, one of the opportunities was “collaborative computing applications that combine TEEs with other PETs for revenue-generating, not security/cost-saving activities.” This is true of most of the assessments: the opportunities are at the intersections. This is why I am sceptical of domain-specific research, or of domain-specific venture funds for that matter.

So this week I spoke to Karim Eldefrawy, CTO and co-founder of Confidencial, a startup spun out of SRI International. Confidencial’s data security technology originated in DARPA programs (Brandeis and RACE), so if you want to know about cutting-edge security technology, who better to speak to?

Karim says something more interesting than “here is how we combine complex cryptography to create the most cutting-edge security solution”. He says: we had a novel technology based around attribute-based encryption (ABE), a generalisation of public-key encryption that enables fine-grained access control of encrypted data using authorisation policies. Great, I say, let’s fire up State of the Future; I’ll add attribute-based encryption and Let Us Face The Future Together. Hold on, says Karim. He spoke to customers, and they didn’t want ABE. They wanted standard encryption. In fact, they literally couldn’t use non-standard encryption, even if it was better…

Interesting, I say. Let’s talk.

📑 Five Things I Learned

Shift-Up: Security at the application level (the top layer of the TCP/IP or OSI stack) matters a lot, not only network, infrastructure, or server-level security. Karim and I will attempt to coin the term “shift-up” for this trend, mirroring “shift-left”, in which testing is performed earlier in the lifecycle (i.e. moved left on the project timeline). This is consistent with broader trends such as local-first software, edge computing, and pushing intelligence to the edge.

NIST-compliance: I didn’t quite realise the extent to which “Is your solution NIST compliant?” was a checkbox for regulated industries. It’s not the death knell for non-standard cryptography in regulated industries, but it slows adoption and increases buyer risk. The implications are 1) non-standard, non-NIST-approved crypto solutions (MPC, FHE, ZKP) should avoid regulated industries, and 2) if targeting regulated industries, expect the sales cycle to be slower and conversion to be lower.

Post Quantum Standards: The NIST PQC standardisation process, due to be completed in the next year, will catalyse broader adoption of cryptographic and security tools as CISOs rethink their security architecture. For standard and PQC cryptography start-ups, this is the “why now”. Less so for non-standard cryptography companies, because of the NIST compliance point.

Scalpel, not a hammer: The vision behind Confidencial’s selective encryption is seductive: encrypt only the sensitive words, paragraphs and documents and leave the rest in plaintext. If you can do this accurately and reliably, you avoid the computational and cost overhead of encrypting and decrypting everything. This is not a cryptographic breakthrough but the result of open document formats and extension standards.

Real-time encryption: A shared vision of automatic, real-time encryption of sensitive elements within the application. This “automatic” functionality is a huge opportunity for a private LLM with private cloud training and local inference.
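To make the “scalpel, not a hammer” idea concrete, here is a minimal TypeScript sketch using Node's built-in `node:crypto` module. It assumes the sensitive spans have already been identified (here they are simply flagged by hand). It encrypts each flagged span with AES-256-GCM under a single document key and leaves everything else as plaintext. The `Segment` shape and the function names are hypothetical. This is not Confidencial's document format or implementation.

```typescript
import { createCipheriv, createDecipheriv, randomBytes } from "node:crypto";

// A document as a list of segments: clear text, or an AES-256-GCM "sealed" span (base64 fields).
type Segment =
  | { kind: "clear"; text: string }
  | { kind: "sealed"; iv: string; tag: string; data: string };

// Encrypt one sensitive span with a fresh 96-bit nonce.
function sealSegment(text: string, key: Buffer): Segment {
  const iv = randomBytes(12);
  const cipher = createCipheriv("aes-256-gcm", key, iv);
  const data = Buffer.concat([cipher.update(text, "utf8"), cipher.final()]);
  return {
    kind: "sealed",
    iv: iv.toString("base64"),
    tag: cipher.getAuthTag().toString("base64"),
    data: data.toString("base64"),
  };
}

// Return the plaintext of any segment; throws if a sealed span was tampered with or the key is wrong.
function openSegment(seg: Segment, key: Buffer): string {
  if (seg.kind === "clear") return seg.text;
  const decipher = createDecipheriv("aes-256-gcm", key, Buffer.from(seg.iv, "base64"));
  decipher.setAuthTag(Buffer.from(seg.tag, "base64"));
  const plain = Buffer.concat([decipher.update(Buffer.from(seg.data, "base64")), decipher.final()]);
  return plain.toString("utf8");
}

// The "scalpel": only parts flagged as sensitive are encrypted; the rest stays plaintext.
function selectivelyEncrypt(parts: { text: string; sensitive: boolean }[], key: Buffer): Segment[] {
  return parts.map((p): Segment => (p.sensitive ? sealSegment(p.text, key) : { kind: "clear", text: p.text }));
}
```

Each sealed span gets its own random nonce. The GCM tag means a tampered span, or one opened with the wrong key, fails to open instead of decrypting to garbage. A real product would operate on the elements of a document format (paragraphs, cells, fields) rather than on raw strings.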

🤙 The Conversation

Q1: Thanks for joining me, Karim. Let’s get oriented: what problem does Confidencial solve, and which technologies do you use to solve it?

We are building a back-end platform with multiple front-ends to realise one vision: data-centric security. It’s called data security, not infrastructure or server-level security. Historically, we've protected infrastructure. Our vision is to be the platform that protects unstructured data wherever it lives, wherever it goes, and in whatever front end you're using, with one easy-to-use experience that guarantees both protection and adoption. And obviously, protection can mean a lot of things. I mean using cryptography to ensure confidentiality, integrity, and authenticity.
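As a rough illustration of how standard primitives cover those three properties, the sketch below (TypeScript, Node's `node:crypto`) uses AES-256-GCM for confidentiality and integrity. It adds an Ed25519 signature for the authenticity of whoever produced the blob. Ed25519 is one option among NIST-approved signature schemes; it was added in FIPS 186-5. The `ProtectedBlob` layout and function names are assumptions for illustration, not Confidencial's design.

```typescript
import { createCipheriv, createDecipheriv, generateKeyPairSync, randomBytes, sign, verify, KeyObject } from "node:crypto";

interface ProtectedBlob {
  iv: Buffer;
  ciphertext: Buffer;
  tag: Buffer;       // GCM tag: integrity of the ciphertext under the data key
  signature: Buffer; // Ed25519 signature: who produced this blob
}

// Confidentiality + integrity via AES-256-GCM, then authenticity via an Ed25519 signature.
function protect(plaintext: string, dataKey: Buffer, signingKey: KeyObject): ProtectedBlob {
  const iv = randomBytes(12);
  const cipher = createCipheriv("aes-256-gcm", dataKey, iv);
  const ciphertext = Buffer.concat([cipher.update(plaintext, "utf8"), cipher.final()]);
  const tag = cipher.getAuthTag();
  // Sign everything the reader will rely on: nonce, ciphertext and tag.
  const signature = sign(null, Buffer.concat([iv, ciphertext, tag]), signingKey);
  return { iv, ciphertext, tag, signature };
}

// Verify the signature first, then decrypt; either check failing throws.
function unprotect(blob: ProtectedBlob, dataKey: Buffer, verifyKey: KeyObject): string {
  const signed = Buffer.concat([blob.iv, blob.ciphertext, blob.tag]);
  if (!verify(null, signed, verifyKey, blob.signature)) throw new Error("signature check failed");
  const decipher = createDecipheriv("aes-256-gcm", dataKey, blob.iv);
  decipher.setAuthTag(blob.tag);
  return Buffer.concat([decipher.update(blob.ciphertext), decipher.final()]).toString("utf8");
}

// Example: the author signs with their private key; any reader checks with the public key.
const author = generateKeyPairSync("ed25519");
const dataKey = randomBytes(32);
const blob = protect("Q3 board minutes", dataKey, author.privateKey);
```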

Q2: Lovely stuff. All the best words. But how exactly? Which tools do you use?

We're focused on enterprises and regulated industries. So there is no way around using the standard cryptography approved by NIST (the National Institute of Standards and Technology) if you want to be deployed in production. Today, that means standard public-key and private-key (symmetric) cryptography and digital signatures. We started here to get the platform deployed quickly, but we designed our software with an easy migration path, so when the new PQC standards are finalised in the next year, we can upgrade with minimal disruption to the customer. Then there is a component in our back-end we call “lightweight (or function-specific) secure computation”; this is known as distributed or threshold crypto, and its primary use is protecting the keys in the back-end. (I use crypto to mean cryptography, not cryptocurrency.)
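For readers unfamiliar with threshold cryptography, its simplest building block is secret sharing. A key is split into n shares so that any t of them recover it and fewer reveal nothing. Below is a minimal sketch of Shamir secret sharing over GF(2^8), with one random polynomial per key byte. It simplifies heavily: `combine` reassembles the key in one place, which is exactly what production threshold schemes (threshold decryption or signing) are designed to avoid. Nothing here reflects how Confidencial's back-end actually works.

```typescript
import { randomBytes } from "node:crypto";

// Multiply in GF(2^8) with the AES reduction polynomial x^8 + x^4 + x^3 + x + 1 (0x11b).
function gfMul(a: number, b: number): number {
  let product = 0;
  while (b > 0) {
    if (b & 1) product ^= a;
    a <<= 1;
    if (a & 0x100) a ^= 0x11b;
    b >>= 1;
  }
  return product;
}

// Inverse of a non-zero element: a^254, since a^255 = 1 in GF(2^8).
function gfInv(a: number): number {
  let result = 1;
  for (let i = 0; i < 254; i++) result = gfMul(result, a);
  return result;
}

interface Share { x: number; y: Buffer; }

// Split `secret` into n shares, any t of which reconstruct it (one random polynomial per byte).
function split(secret: Buffer, n: number, t: number): Share[] {
  if (t < 1 || t > n || n > 255) throw new Error("need 1 <= t <= n <= 255");
  const shares: Share[] = [];
  for (let x = 1; x <= n; x++) shares.push({ x, y: Buffer.alloc(secret.length) });
  for (let i = 0; i < secret.length; i++) {
    // Constant term is the secret byte; the other t-1 coefficients are random.
    const coeffs = [secret[i]].concat(Array.from(randomBytes(t - 1)));
    for (const share of shares) {
      let y = 0;
      for (let k = coeffs.length - 1; k >= 0; k--) y = gfMul(y, share.x) ^ coeffs[k]; // Horner's rule
      share.y[i] = y;
    }
  }
  return shares;
}

// Lagrange interpolation at x = 0; in GF(2^8), subtraction is XOR.
function combine(shares: Share[]): Buffer {
  const secret = Buffer.alloc(shares[0].y.length);
  for (let i = 0; i < secret.length; i++) {
    let acc = 0;
    for (const sj of shares) {
      let basis = 1;
      for (const sm of shares) {
        if (sm.x !== sj.x) basis = gfMul(basis, gfMul(sm.x, gfInv(sj.x ^ sm.x)));
      }
      acc ^= gfMul(sj.y[i], basis);
    }
    secret[i] = acc;
  }
  return secret;
}
```

With `split(key, 3, 2)`, for example, the three shares could sit with the customer, a back-up location and the service. Any two recover the key, and no single holder can.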

Q3: What exactly does an easy migration path to post-quantum mean?

It means you use encryption schemes in a black-box manner, so you do not depend on the particulars of any specific algorithm. So, you can replace the elliptic curve-based public key encryption scheme (more likely a key establishment scheme) with the new post-quantum scheme with minimal interference to a user workflow. All upgrades should be performed in the back-end, and the admin can just choose the scheme and its parameters (provided by NIST). As an admin, you should ideally be able to switch to a post-quantum algorithm over a weekend. You won’t need to go and replace an agent on every user’s machine in your enterprise.
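Here is a minimal sketch of what using encryption schemes “in a black-box manner” can look like. Callers never name an algorithm, every ciphertext carries a scheme ID in its header, and switching the scheme for new data is a one-line configuration change. To avoid relying on any particular post-quantum API, the two registered schemes are AES-128-GCM and AES-256-GCM, standing in for “old” and “new”. CNSA 2.0 does specify AES-256. The registry, the `config` object and the header layout are all hypothetical.

```typescript
import { createCipheriv, createDecipheriv, randomBytes } from "node:crypto";

// Each scheme has a stable one-byte ID written into every ciphertext header.
interface Scheme { id: number; cipher: "aes-128-gcm" | "aes-256-gcm"; keyBytes: number; }

const REGISTRY: Scheme[] = [
  { id: 1, cipher: "aes-128-gcm", keyBytes: 16 },
  { id: 2, cipher: "aes-256-gcm", keyBytes: 32 },
  // A post-quantum scheme would be added under a new ID (hypothetical); callers would not change.
];

// The admin-facing switch: which registered scheme new data is written with.
const config = { activeSchemeId: 1 };

function schemeById(id: number): Scheme {
  for (const scheme of REGISTRY) if (scheme.id === id) return scheme;
  throw new Error(`unknown scheme ${id}`);
}

function keyFor(scheme: Scheme, keys: Map<number, Buffer>): Buffer {
  const key = keys.get(scheme.id);
  if (!key || key.length !== scheme.keyBytes) throw new Error(`no valid key for scheme ${scheme.id}`);
  return key;
}

// Output layout: [scheme ID (1 byte) | IV (12) | tag (16) | ciphertext]; the header is authenticated as AAD.
function encrypt(plaintext: Buffer, keys: Map<number, Buffer>): Buffer {
  const scheme = schemeById(config.activeSchemeId);
  const header = Buffer.from([scheme.id]);
  const iv = randomBytes(12);
  const cipher = createCipheriv(scheme.cipher, keyFor(scheme, keys), iv);
  cipher.setAAD(header);
  const body = Buffer.concat([cipher.update(plaintext), cipher.final()]);
  return Buffer.concat([header, iv, cipher.getAuthTag(), body]);
}

// The scheme is read from the header, so data written before a switch still decrypts.
function decrypt(blob: Buffer, keys: Map<number, Buffer>): Buffer {
  const scheme = schemeById(blob[0]);
  const decipher = createDecipheriv(scheme.cipher, keyFor(scheme, keys), blob.subarray(1, 13));
  decipher.setAAD(blob.subarray(0, 1));
  decipher.setAuthTag(blob.subarray(13, 29));
  return Buffer.concat([decipher.update(blob.subarray(29)), decipher.final()]);
}
```

The design choice to note: old ciphertexts keep decrypting after the switch, because the header decides how to open them, not the current configuration. Re-encrypting historical data under the new scheme would be a separate background job.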

Q4: Great, so fire-and-forget. But from what we’ve seen, few customers are ready. Customers don’t know these solutions exist, and when they do, they don’t trust them because they struggle to understand how they work. Few customers have cryptographers on staff to help. (Nigel Smart can only do so much consulting, lol)

Sure, some evangelising is still required. People are used to protecting infrastructure, a server or a networking box. So a mindset shift will be necessary, which makes it more complicated than selling a typical SaaS product. But the pitch is getting easier, and when people get it, they get it. We say we want to bring data protection to its final and natural destination: as close to the sensitive content as possible, inside whatever data container it lives in (i.e., PDF, Word doc, image, etc.). There are a couple of things to note regarding market education. One, our solutions already integrate with existing enterprise platforms like Microsoft Office and Google Workspace, which makes adoption (in theory) more straightforward for the end user. Two, we work with existing document formats, which makes adoption easier. On the trust side, it’s lots of different things. It's web-based, or an extension or add-in. We use well-known libraries and code, and you can inspect the code since it is JavaScript. We can also integrate with key management software like HashiCorp Vault and with HSMs. We’re also getting certified and having auditors look at the executed code and the key management. All of these make CISOs comfortable. But the broader point is that once you move to application-level security, you reduce complexity and attack vectors, because the protection travels with the data. So the trust question becomes more tangible and easier, because we say: “We can’t see any of your data in our back-end (because it is encrypted client-side), we don’t have your keys, and you can inspect the executing code, so you don’t have to trust us with much; you can verify all this”.

Q5: Right, so you are doing all the little things right, and you can say “we can’t see your data”, but I've heard this from every startup I’ve spoken with, too. What’s different?

The first question I used to get when speaking to CISOs and CIOs was: what encryption are you using? Because the answer was standard NIST-approved encryption like AES and RSA, the conversation continued. It is much easier because these algorithms have been tested and validated by NIST. The customer doesn’t have to do it themselves; in most cases, they couldn’t even if they wanted to. They rely on NIST to approve something, then they adopt it. I started with attribute-based encryption (ABE), and in theory, over the long term (5-7 years), ABE is the way to go. But what do you do until then? There are currently no NIST ABE standards, and as the world moves to PQC, you don't only need classically secure ABE, you need efficient quantum-safe ABE; to the best of my knowledge, that is still an active area of research. If my answer had been that we use advanced, non-NIST-standardised cryptography, conversations could continue, but they might get stuck later when you move to production. Ultimately, compliance needs to see a NIST standard to tick a box required for compliance and auditing. We will eventually get standards for advanced cryptography, but not for several years; the PQC process has taken over seven years.

Q6: This is profound. You’re saying enterprises won’t adopt advanced cryptography if it’s not a NIST standard. But we have a bunch of MPC, ZKP, and FHE companies running around trying to sell to regulated industries?

I’ve worked for over a decade in threshold cryptography and MPC, and more recently on accelerating FHE and on computer-aided verification of zero-knowledge proofs. These are the right tools for the long term, no doubt. But put yourself in the shoes of a CISO today or in the next two years. In my experience, CISOs are the gatekeepers for new advanced cryptography deployments. They care about security, but they face budget constraints and compliance pressure, which means ISO, SOC, and other (operational) standards. In every organisation, there is a compliance checklist. The best organisations have processes for experimentation, but there is always a checkbox, and if you can’t tick it, you need an excellent reason. We have worked with DARPA and the Air Force, and to get deployed and certified for anything related to the federal government, you have to tick the right boxes (and many of them). If you want to be deployed at scale in production in the next 2-3 years in regulated industries or the DoD, it has to be NIST-standardised cryptography (and, beyond that, approved by the NSA in its Commercial National Security Algorithm Suite 2.0).

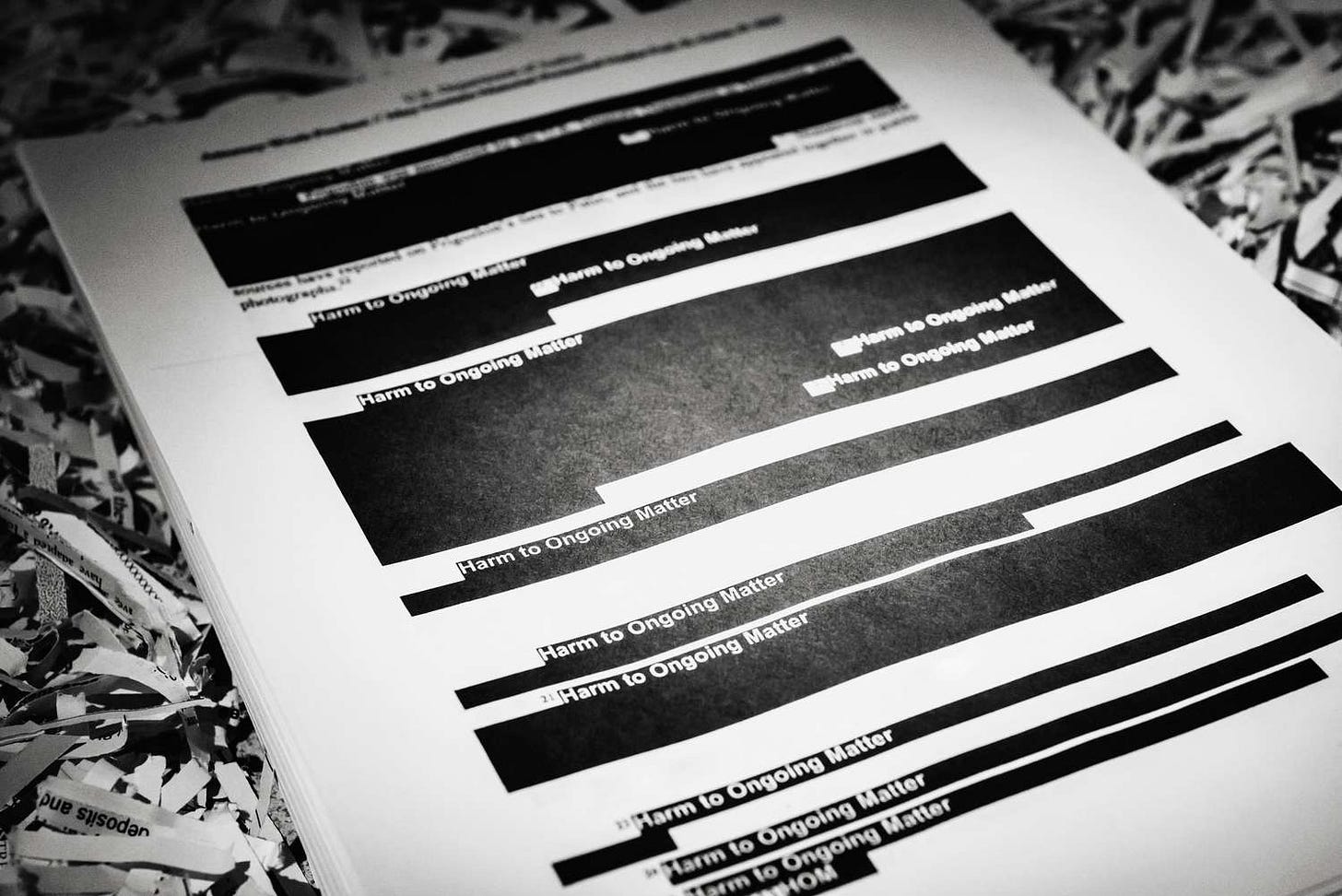

This is not just my opinion; this is the official NSA guidance in "The Commercial National Security Algorithm Suite 2.0 and Quantum Computing FAQ". See page 12, which says: "CNSSP 15 mandates using public standards while allowing exceptions for additional NSA-approved options when needed. Neither the NSA nor NIST has produced standards for these areas, and the NSA has not issued any general approval for using these technologies. Many of these topics involve novel security properties requiring further scrutiny. ... In particular, the following have no generally approved solutions: Distributed ledgers or blockchains, Private information retrieval (PIR), Private set intersection (PSI), Identity-based encryption (IBE), Attribute-based encryption (ABE), Homomorphic encryption (HE), Group signatures, Ring signatures, Searchable encryption."

But I may be biased, because I'm not actively deploying non-NIST-standardised schemes, so there is a chance they are deployed at a larger scale than I see. We know from the cryptocurrency world that they are. But for pharma, biotech, life sciences, defence, aerospace, and finance, I can only see a path to large-scale production deployment of advanced cryptography in the next 2-3 years with NIST standards. This is a reason to push for NIST standards.
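To show how blunt the “checkbox” is, here is a toy compliance check in TypeScript. Every algorithm a product relies on must appear on an allowlist, and anything else is flagged, however strong it is. The identifiers are hypothetical labels, and the list is deliberately short and illustrative. It is not an authoritative reading of NIST or NSA guidance. `CKKS-HE` stands in for any homomorphic encryption scheme, which, per the NSA FAQ quoted above, has no general approval.

```typescript
// Illustrative allowlist with hypothetical identifiers, each tagged with the NIST publication it comes from.
// Not an authoritative list: real audits rely on the NIST and NSA documents themselves.
const APPROVED = new Map<string, string>([
  ["AES-256-GCM", "FIPS 197 + SP 800-38D"],
  ["SHA-384", "FIPS 180-4"],
  ["ECDSA-P384", "FIPS 186-5"],
  ["RSA-3072-PSS", "FIPS 186-5"],
]);

interface CheckResult { pass: boolean; unapproved: string[]; }

// The "checkbox": every algorithm a product relies on must be on the allowlist.
function complianceCheck(algorithmsInUse: string[]): CheckResult {
  const unapproved = algorithmsInUse.filter((alg) => !APPROVED.has(alg));
  return { pass: unapproved.length === 0, unapproved };
}
```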

Q7: I want to press you: is selling to healthcare or finance industries with FHE, MPC, and ZKP in 2023 impossible?

Impossible is too strong. Given the state of performance and technical maturity, it may be too slow to be deployed at scale today anyway, so you might want to find smaller, non-regulated use cases to experiment with. But it’s not controversial to say that anything non-standardised will take longer to be adopted. That’s the reality. You can get sign-off for an exception to the checkbox, but it will take longer, and fewer CISOs will take the risk. So you might be looking at a sales cycle longer than 12 months. You might see lots of pilots, but a significant drop-off before production. And I think you can forget it for government and defence customers; it’s already hard enough with standardised, 20-year-old cryptography (you have to get a lot of certifications for the libraries and the software itself). For standard cryptography, deployment can take nine months, and moving to production is easier.

Q8: Fascinating, because a 2x longer time to production means 2x fewer clients and 2x less revenue. For startups, these are the metrics that count. This is the difference between raising the next round and failing. Okay, let’s move on to how people are using the tools. You are integrating with Microsoft Office and other corporate-approved applications. This has always felt like the right way to deploy PETs, but why hasn’t it already been done?

Well, it’s more complicated than you might think. In lots of regulated industries, even the app store is blocked. You can’t just download a new extension or add-in; you have to get approval. They have processes by which new applications can be deployed, but these go through the CISO. So that’s your first problem. You can serve customers through a web interface, which we are doing now; that’s easier, but it introduces friction. Where our tool is a single button in Office, users love it. So that’s where we want to end up.

There is also a need for more automation to help usability: you take the burden off users by automating a process. Initially, we thought we should start with manual encryption and users signing manually, and introduce more automation and more complex workflows over time. Interestingly, it's working the other way round: the automated versions are the ones getting deployed first. When you request a document or some fields, we automatically encrypt them in JavaScript, and then you use viewers and add-ins passively. The encryption happens automatically through an ingestion process, or a process that generates reports automatically from databases. With that sort of automation, we expect people to get used to seeing add-ons and to using them for one-off cases such as securing financial documents or internal investigations. It’s like PGP, which can be regarded as a cryptography success story (or not, depending on how you want to look at it). But PGP is now over 20 years old. We need a modern version that is plug-and-play, can do more, and is usable by the average user. The real usability challenges are not better encryption schemes. They are better key management: encryption policies, key rotation and revocation, and standard management of keys and other cryptographic material. Crypto-agility is the keyword that more people will become aware of (driven by PQC adoption).
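Key rotation and revocation are where envelope encryption earns its keep. It is a common industry pattern; we are not claiming it is exactly what Confidencial does. Each document is encrypted under its own data-encryption key (DEK), and only the DEK is wrapped under a versioned key-encryption key (KEK). Rotating the KEK means re-wrapping a 32-byte key rather than re-encrypting the document. Deleting an old KEK version revokes anything still wrapped under it. In this TypeScript sketch, the in-memory `keks` map is a hypothetical stand-in for a real key manager.

```typescript
import { createCipheriv, createDecipheriv, randomBytes } from "node:crypto";

interface Sealed { iv: Buffer; tag: Buffer; data: Buffer; }

function gcmSeal(key: Buffer, plaintext: Buffer): Sealed {
  const iv = randomBytes(12);
  const cipher = createCipheriv("aes-256-gcm", key, iv);
  const data = Buffer.concat([cipher.update(plaintext), cipher.final()]);
  return { iv, tag: cipher.getAuthTag(), data };
}

function gcmOpen(key: Buffer, sealed: Sealed): Buffer {
  const decipher = createDecipheriv("aes-256-gcm", key, sealed.iv);
  decipher.setAuthTag(sealed.tag);
  return Buffer.concat([decipher.update(sealed.data), decipher.final()]);
}

// Hypothetical stand-in for the customer's key manager: versioned key-encryption keys (KEKs).
const keks = new Map<number, Buffer>([[1, randomBytes(32)]]);

function kek(version: number): Buffer {
  const key = keks.get(version);
  if (!key) throw new Error(`KEK v${version} is unavailable (revoked?)`);
  return key;
}

interface EncryptedDoc { body: Sealed; wrappedDek: Sealed; kekVersion: number; }

// Each document gets its own data-encryption key (DEK); only the wrapped DEK is stored.
function encryptDoc(text: string, kekVersion: number): EncryptedDoc {
  const dek = randomBytes(32);
  return { body: gcmSeal(dek, Buffer.from(text, "utf8")), wrappedDek: gcmSeal(kek(kekVersion), dek), kekVersion };
}

function decryptDoc(doc: EncryptedDoc): string {
  const dek = gcmOpen(kek(doc.kekVersion), doc.wrappedDek);
  return gcmOpen(dek, doc.body).toString("utf8");
}

// Rotation re-wraps the 32-byte DEK under a new KEK; the document body is not re-encrypted.
function rotate(doc: EncryptedDoc, newVersion: number): EncryptedDoc {
  const dek = gcmOpen(kek(doc.kekVersion), doc.wrappedDek);
  return { ...doc, wrappedDek: gcmSeal(kek(newVersion), dek), kekVersion: newVersion };
}
```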

Q9: There is a mindset shift here. You tell customers to forget the server-client security model and focus on keeping the data safe. You are describing a world in which you encrypt the data directly inside the application, so there is less need to worry about server-side infrastructure. Schematically, it’s cleaner, but how do you go in and tell an organisation that?

That’s what it means to have a data-centric security paradigm. We frame the problem around the data, not the device. Because what do attackers go after? They go after the cheese, which is the data, and that data often sits in, and is handled by, applications (in the application layer of the TCP/IP stack). If you look at how we historically developed security, it followed the layers and boundaries of the TCP/IP stack, because networking and distributed systems came from the Internet community. So the first thing was to secure transmission. And when you look at the more complex OSI stack, you find the session layer; that’s where textbooks teach that Secure Sockets Layer (SSL) lives. But the more straightforward TCP/IP stack won, and Transport Layer Security (TLS) now lives in the transport layer. We also have IPsec for security at the network layer. But what matters (the data) mostly sits, and is handled, up in the application layer, especially now that data is more dispersed across multi-cloud and distributed settings and people working from home. The perimeter model is outdated. You don't know where the data will live in the long term or where it may be temporarily hosted, so trying to protect the data by enforcing the same configuration guarantees across different infrastructures and transports is futile. The easiest thing is to push the protection into the application, attach it to the data from within the application, and be able to decrypt it in the application. Of course, this also poses challenges and imposes limitations.

But with the proper infrastructure, you can have back-end servers holding keys and policies, with threshold decryption and processing whenever needed. That's precisely what the future should look like. It’s not a new idea; it’s been around since the early 2000s in the form of data-centric networking. Fifteen or sixteen years ago, the NSF put a lot of money into a future internet architecture program, and much of the edge-caching work on Content-Centric Networking (CCN) and Named Data Networking (NDN) came from such programs. But such ideas were in tension with the long-standing principle of not changing the network’s core and instead pushing intelligence out to the edges (the end-to-end argument in system design). Performing such functions is easier now because the infrastructure doesn’t matter as much; you can move it from cloud to cloud with microservices and infrastructure as code. If you have your whole infrastructure on AWS and want to migrate to Azure or GCP next year, you can do that today with minimal changes. If the data is already encrypted, you take it as is and copy it to the other cloud. There's no need to decrypt, translate and so on.

Q10: But what about key management and HSM infrastructure? If you go to a customer who is already “crypto-agile”, as they say, what do they need to change? Does Confidencial break their workflows?

As with all security, it’s not drag-and-drop, so some configuration is required, but not a vast amount and nothing the security team can’t handle. If you start encrypting content at the data layer or inside the application, a lot of back-end functionality, such as indexing and search, will break. The ideal solution for this is some form of homomorphic encryption, so you don’t have to decrypt and can still do all the indexing and search privately. But that raises the question of which keys you are encrypting to and who has the ability to decrypt, and I don't think people have thought that far ahead yet. Homomorphic encryption, in its current state, is ideal for outsourcing computation to an untrusted engine (e.g., a public cloud). So we will get there eventually, but as it stands, there are gaps if you want a fully end-to-end confidentiality solution with compute capability between different organisations.

With what we do, selectively encrypting the sensitive parts and leaving the rest of the document in the clear, you get a tuneable solution. You can run indexing and processing on the clear parts and handle the encrypted elements differently (maybe in a TEE). So you’ve made your job 10x easier, or more. And now you can think through an appropriate key management solution for the sensitive data. You could work with HashiCorp Vault, for example, which uses X.509 and the standard encryption and signature schemes that we're using. You just replace the code that calls our key server with calls to HashiCorp's APIs, and those are very similar to the ones used for HSMs, too.
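The “tuneable” point can be sketched in a few lines of TypeScript. The code builds an ordinary keyword index from the clear segments only and reports which segments are sealed and need a separate path; the TEE mentioned above is one such path. The `Segment` shape is hypothetical. The sealed content is a placeholder string rather than real ciphertext, since the point is what the indexer can and cannot see.

```typescript
// Segments of a selectively encrypted document (hypothetical shape; ciphertext kept opaque).
type Segment = { kind: "clear"; text: string } | { kind: "sealed"; ciphertext: string };

// Inverted index (word -> segment positions) built from clear segments only.
function buildIndex(segments: Segment[]): Map<string, number[]> {
  const index = new Map<string, number[]>();
  segments.forEach((seg, pos) => {
    if (seg.kind !== "clear") return; // sealed spans are opaque to the indexer
    const words: string[] = seg.text.toLowerCase().match(/[a-z0-9]+/g) ?? [];
    for (const word of words) {
      let hits = index.get(word);
      if (!hits) {
        hits = [];
        index.set(word, hits);
      }
      if (hits.indexOf(pos) === -1) hits.push(pos);
    }
  });
  return index;
}

// Positions that need a different path (e.g. decryption inside a TEE) before they can be processed.
function sealedPositions(segments: Segment[]): number[] {
  return segments.map((seg, pos) => (seg.kind === "sealed" ? pos : -1)).filter((pos) => pos >= 0);
}
```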

Q11: Explain the tuneable piece in a little more detail. With selective encryption, we’ve moved away from a binary world of plaintext or ciphertext. This seems important because if you only need to process a small amount of ciphertext, advanced encryption techniques like FHE or MPC suddenly become more viable. Why hasn't it been done before?

Honestly, it has nothing to do with cryptography. We had research prototypes five years ago, and several companies made attempts ten or more years ago. But four things came together. First, document formats 15 years ago were closed, which made working with PDF, docx, and xlsx challenging; MS Office used a closed format. Microsoft then moved to an open, XML-based standard: Office Open XML. Because document formats are now more open, there are more libraries and frameworks that are easy to work with. This is huge, because you must access the document’s encoding and its individual elements, and without good library support you cannot get to the object level quickly, whether at the page, paragraph or sentence level. Second, no well-established frameworks for add-ins and extensions inside applications existed 10-15 years ago. It started in web browsers, and now every platform has extensions: look at Slack, Zoom, Google Workspace, etc. Extensions go hand-in-hand with open document formats, and there are now easy-to-work-with frameworks to access the elements. Third, the cloud. It’s been a 10-15 year journey, and it’s still surprising how slow the migration has been. Bill Buchanan says the cloud is still growing at 18% per year. It’s an essential driver for data protection, as customers worry about moving their sensitive data. Finally, mobile connectivity has grown with 4G, 5G, and the coming Wi-Fi 6 and 6G/NextG, so decryption keys held in distributed stores are no longer hard to reach. These four factors (open formats, extensions, cloud, and mobile) mean that selective encryption is now viable. Additionally, organisations are rethinking their cryptography infrastructure as post-quantum algorithms are standardised. Now is a great time to be selling new data protection tools.

Q12: Can you describe what this will look like to the average user in 10 years? I’m in Apple Notes with some live transcription and an AI assistant; is there some sort of automatic encryption bot that is encrypting sensitive words and details live? And I, as a user, don’t know this is happening; all I know is that I can share the document with anyone, anywhere, because behind the scenes the sensitive data is protected.

Ideally, but it's going to be challenging to realise. It may work for sharing inside the enterprise, but making it work in personal settings is more difficult. This raises some critical questions about who detects the sensitive parts of the data. It will be crucial that I (and Confidencial) don't see your data or content and don't have the keys. I might hold a share of the keys for recovery, reliability and additional security, but the whole point is that the processing happens at the edge: on your device, in your browser, in your document. For the enterprise, yes, I can imagine it, hopefully sooner than ten years for specific departments like HR, finance, corporate legal, the executive suite and R&D.

Q13: Good point; who is doing the automatic detection? Is there a data protection model trained on sensitive and non-sensitive data? This seems problematic.

A whole DLP (data loss prevention or protection) industry exists today that already does this. They run it as boxes or agents, or on Chromium-based enterprise browsers like Surf, Menlo Security, and Island. You can think of these browsers as operating systems, as with Chromebooks. So in these cases, there is a local instance, and you can run the model locally. There will be a lot of exciting work on hybrid architectures for enterprises. I am picturing three tiers: a lightweight first tier running locally on your machine, a second tier at the enterprise server level, and a third tier in the cloud. This is where you can start using PETs to design a fully end-to-end private model. I heard Zama is doing some work on this, and I have read some blog posts about how you would do LLMs in privacy-enhancing ways. It doesn’t seem like an impossible problem, but it will likely take a lot of engineering to make all this happen without touching the cloud. Also, you’ll still need the whole front-end infrastructure and the key management we're building. So I can see somebody else providing this in the future, and we would integrate with it. We provide our engines, which you can deploy locally in your enterprise.
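As a stand-in for the lightweight local tier, here is a TypeScript sketch that detects sensitive spans on-device. It then turns them into the clear and sensitive parts that selective encryption needs. The regular expressions are illustrative assumptions only. As Karim describes, a DLP engine or a locally run model would replace `detect` in practice, and real detection is far harder than three patterns.

```typescript
// A sensitive span: [start, end) character offsets plus a label.
interface Finding { start: number; end: number; label: string; }

// Illustrative patterns only; a DLP engine or local model would take this role in practice.
const PATTERNS: { label: string; re: RegExp }[] = [
  { label: "email", re: /[\w.+-]+@[\w-]+\.[\w.-]+/ },
  { label: "us-ssn", re: /\b\d{3}-\d{2}-\d{4}\b/ },
  { label: "iban", re: /\b[A-Z]{2}\d{2}[A-Z0-9]{11,30}\b/ },
];

// Runs on the local device and returns offsets only; nothing is sent anywhere at this step.
function detect(text: string): Finding[] {
  const findings: Finding[] = [];
  for (const { label, re } of PATTERNS) {
    const scanner = new RegExp(re.source, "g"); // fresh global regex, so lastIndex starts at 0
    let m: RegExpExecArray | null;
    while ((m = scanner.exec(text)) !== null) {
      findings.push({ start: m.index, end: m.index + m[0].length, label });
    }
  }
  return findings.sort((a, b) => a.start - b.start);
}

// Turn findings into clear/sensitive parts for selective encryption (overlaps skipped for brevity).
function toParts(text: string, findings: Finding[]): { text: string; sensitive: boolean }[] {
  const parts: { text: string; sensitive: boolean }[] = [];
  let cursor = 0;
  for (const f of findings) {
    if (f.start < cursor) continue;
    if (f.start > cursor) parts.push({ text: text.slice(cursor, f.start), sensitive: false });
    parts.push({ text: text.slice(f.start, f.end), sensitive: true });
    cursor = f.end;
  }
  if (cursor < text.length) parts.push({ text: text.slice(cursor), sensitive: false });
  return parts;
}
```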

Q14: This back-end and front-end framing is helpful for people. In the workflow I laid out earlier, it’s me as an individual. But what about at the enterprise level? So you have lots of employees making notes and encrypting them. All this data is at some point consolidated in a data store and then a database for querying. Is this a middle layer, and if we’ve encrypted what we need to on the front end, then what does the database, indexing and querying look like? Is there a role for private information retrieval here?

We mostly focus on unstructured data; we are not dealing with databases. That said, people put documents in databases, and if the database itself is also encrypting, that's orthogonal. It won’t hurt, because the database will treat the file as one object and encrypt the whole file. So it's like onion encryption: our protection lives inside the document, close to the content, and then the database may add another layer, perhaps at the record level. We should think about it as layers and ask what the best design for the problem is. My objective is to ensure that if you accidentally send documents to the wrong person, or if a back-end store gets compromised, you reduce the amount of information leaked as much as possible. There have been many instances where cloud stores are misconfigured and suddenly gigabytes of data in the form of documents are leaked. So if you have an additional layer of protection and the first one fails, the second one still works.
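The “onion” argument can be shown with two independent AES-256-GCM layers. The inner layer uses a key held by the document owner, and the outer layer uses a key held by the storage layer. If the store is misconfigured or its key leaks, peeling the outer layer still yields only ciphertext. The key names and byte layout are assumptions for this sketch, written with Node's `node:crypto` module.

```typescript
import { createCipheriv, createDecipheriv, randomBytes } from "node:crypto";

// One AES-256-GCM layer; output layout is [IV (12) | tag (16) | ciphertext].
function addLayer(key: Buffer, data: Buffer): Buffer {
  const iv = randomBytes(12);
  const cipher = createCipheriv("aes-256-gcm", key, iv);
  const body = Buffer.concat([cipher.update(data), cipher.final()]);
  return Buffer.concat([iv, cipher.getAuthTag(), body]);
}

// Remove one layer; throws if the key is wrong or the blob was modified.
function peelLayer(key: Buffer, blob: Buffer): Buffer {
  const decipher = createDecipheriv("aes-256-gcm", key, blob.subarray(0, 12));
  decipher.setAuthTag(blob.subarray(12, 28));
  return Buffer.concat([decipher.update(blob.subarray(28)), decipher.final()]);
}

// Two independent keys: one held by the document's owner, one held by the storage layer.
const documentKey = randomBytes(32);
const storageKey = randomBytes(32);

const plaintext = Buffer.from("Salary review: J. Smith", "utf8");
const stored = addLayer(storageKey, addLayer(documentKey, plaintext)); // inner layer first, then outer

// A breached or misconfigured store exposes the outer layer: the attacker still holds only ciphertext.
const afterBreach = peelLayer(storageKey, stored);
```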

Q15: Okay, it’s belt and braces. Encrypt everywhere and wherever. Even throw in a trusted execution environment if you want. I have this mental model: we have an X-axis of need and a Y-axis of customer sophistication. And then, I’ve tried to plot application areas and markets. Healthcare wants to share data, but it’s mainly private data. Huge need. However, the market is highly fragmented, and understanding of cryptography is low. Compared to, say, cybersecurity, where yes, there is a need to pool threat data, but not a huge demand driver. However, the buyers are very sophisticated and can choose solutions based on quality. What do you think about market focus and adoption?

It’s not a bad framing. Of course, as a startup, you have to focus on a use case. But cybersecurity is not a use case. Cybersecurity has different use cases with varying degrees of pain and sophistication. Same for healthcare: pharmaceutical buyers are very sophisticated, for example. But broadly, the process you outline would be a reasonable way of thinking about it. Maybe it’s just that data protection, as part of cybersecurity, isn't a vertical play. It’s horizontal. When you think of application-level security, we are talking more about cybersecurity than privacy. And every industry needs data security for their applications. We can do that with a core platform and APIs and SDKs, which are relatively general. So you de-risk the company because you aren’t betting on one vertical. With this approach, you can serve finance, healthcare, life sciences, defence, and aerospace relatively scalably.

Q16: Sure, but that’s having your cake and eating it. You say we can be a horizontal platform, the business model dream. You are saying you don’t need to focus on a single vertical because, I don’t know, reasons? Application-level data security, not server data security?

Yes, that’s right. If you’re focused on the back-end server, you have a lot of infrastructure to orchestrate. You have to do lots of integrations, which is time-consuming, and it’s best to focus on a single vertical to get all that right. But we don’t have to do that. There is some work to integrate with standard key management software and HSMs, but that's a few (standardised) integrations, and it's more manageable because we aren’t doing anything novel there that would complicate things; we use these interfaces as they are. So we can stay at the application level, secure the data before it leaves the client, and then the client plugs it into their existing infrastructure. Microsoft Office is used in healthcare and cybersecurity, so we don’t have to pick. What we care about more are buyer personas, and they are cross-industry: what applications do CISOs use, and what do the compliance and legal teams need?
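The “few standardised integrations” point is essentially an interface boundary. In the sketch below, application code depends only on a hypothetical `KeyProvider` interface with `wrap` and `unwrap` methods. The in-memory provider is a stand-in. A provider backed by HashiCorp Vault or an HSM would implement the same two methods against that system. We deliberately do not reproduce any vendor API here.

```typescript
import { createCipheriv, createDecipheriv, randomBytes } from "node:crypto";

// The only key-management contract application code depends on (hypothetical interface).
interface KeyProvider {
  wrap(dataKey: Buffer): Promise<Buffer>;
  unwrap(wrapped: Buffer): Promise<Buffer>;
}

// Stand-in provider that keeps a key-encryption key in memory. A class backed by an
// external key manager or HSM would implement the same two methods against that system.
class InMemoryKeyProvider implements KeyProvider {
  private readonly kek: Buffer = randomBytes(32);

  async wrap(dataKey: Buffer): Promise<Buffer> {
    const iv = randomBytes(12);
    const cipher = createCipheriv("aes-256-gcm", this.kek, iv);
    const body = Buffer.concat([cipher.update(dataKey), cipher.final()]);
    return Buffer.concat([iv, cipher.getAuthTag(), body]);
  }

  async unwrap(wrapped: Buffer): Promise<Buffer> {
    const decipher = createDecipheriv("aes-256-gcm", this.kek, wrapped.subarray(0, 12));
    decipher.setAuthTag(wrapped.subarray(12, 28));
    return Buffer.concat([decipher.update(wrapped.subarray(28)), decipher.final()]);
  }
}

// Application code: works with whichever provider the deployment configures.
async function newDataKey(provider: KeyProvider): Promise<{ dataKey: Buffer; wrapped: Buffer }> {
  const dataKey = randomBytes(32);
  return { dataKey, wrapped: await provider.wrap(dataKey) };
}
```

Swapping key back-ends then means writing one new class and changing the configuration, not touching the application code.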

Q17: To summarise, you hypothesise that users are happy to trade off a theoretically more secure solution like ABE, MPC, ZKP, or FHE for lower-risk and more convenient solutions. This is close to my conclusions in the collaborative computing papers and the recent trusted execution environment newsletter.

Yes, but I want to clarify: I’m being pragmatic. I want to use advanced crypto whenever possible. Let’s first build the infrastructure to have a presence in the enterprise, and then it will be easier to deploy and extend to more advanced solutions. So in the short term, as in the next 2-3 years, let’s lay the foundation with existing NIST-standardised cryptography so you can quickly adopt it and then deploy the more advanced cryptography in a couple of years once we get some PQC and other standards. If there's a standard for attribute-based encryption (ABE) that can replace existing public key encryption, I’m also happy with that. We have the original paper and granted patents.

Thanks as always, Karim, it was a pleasure. I’ll direct readers to confidencial.io to learn more.

Your gif of the week this week is brought to you by Kevin.