Has the time come to take Mortal Computing seriously?

Why spend an eye-watering amount of money to make every chip act the same? There is another way. By Dan Wilkinson

Hello chaps and chapettes, today I bring you a guest post from Dan Wilkinson, now of Synopsys, previously of Imagination Technologies and Graphcore.

I’ve been talking to and learning from Dan for over a year now. This note is almost exclusively his work, with a tiny role for me asking questions along the way.

No jokes from me today, Dan is an actual professional (he’s even referenced every claim) and this is great work I’m not going to undermine with silly jokes… I will however offer up a brief TLDR.

TLDR: Chips are expensive to make, yes? Making a chip on a 3nm process node can cost up to a billion dollars. A mask set (the stencils) alone can cost $30-50m. We do this because we want every chip to act exactly the same (so-called fungibility).

Geoff Hinton, he of AI fame, calls this “Immortal Computing”. A new paradigm would be “Mortal Computing”, where we create devices that accept high variability and embrace imperfection rather than fighting it.

Dan frames all our next-gen compute ideas and exotic designs like neuromorphic, photonic, reversible, and probabilistic hardware on this spectrum:

Immortal (what we build today): flawless, deterministic CMOS. GPUs, CPUs

Weakly Immortal: same silicon, but smarter defect-mapping and on-the-fly tuning (think Cerebras wafer-scale), giving 1.5-5× speed/energy gains.

Weakly Mortal: accept bigger variability and let AI controllers juggle temperature, wear and workload—10-100× gains for things like edge-AI chips and robotic adaptation.

Strongly Mortal: brand-new devices (probabilistic, all-optical, reversible) that may even self-repair or replicate—1,000× potential, but years away.

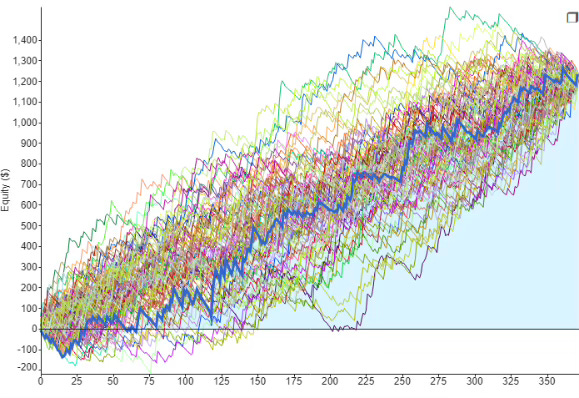

I love this essay because it brings together the entire next-gen computing space into a coherent framework. Dan also created this unbelievably good graphic. It’s explained at the end of the essay.

Read the whole thing below, and if you want to speak to the organ grinder, not the monkey, DM Dan directly.

bye now, lawrence

Has the time come to take Mortal Computing seriously?

Many exotic computing solutions have been proposed, such as optical computing [1], reversible computing [2], computing using the native physics of materials [3], and computing using thermodynamics itself [4]. However, their extended time horizons and risks are problematic for venture investment. At the same time, ventures targeting near-term computing products based on the same CMOS and memory technology in mainstream use today will be very challenged in meaningfully differentiating themselves from those produced by large and powerful incumbents.

Both are compromised from a venture investment perspective. Is there a middle way?

Geoff Hinton coined the term Mortal Computing in 2022 to describe a software program evolved to run on one specific instance of a hardware device. He suggests such a device would be composed of extremely efficient but not entirely reliable computing elements, and that this might be the only way to realize practical computing devices based on revolutionary device technology. See his presentation on YouTube.

This note explores the concept of Mortal Computing and finds that while the development of radically novel or unreliable computing technology is certainly one application for it, it is also broadly applicable to a spectrum of near-term computing innovation and therefore a candidate for new Deeptech ventures with a wide range of time horizons, strong differentiation against the prevailing computing paradigm, and long-term potential.

Immortal Computing

To properly define Mortal Computing, it is first necessary to define its ancestor, Immortal Computing - which is intentionally shackled by the following two constraints (of which the latter is by far the stronger one).

Deterministic: A given program must produce Identical results with Deterministic Precision and Predictable Performance when run multiple times on the same device.

Fungible: A given program satisfies the Deterministic Precision and Predictable Performance conditions when run on multiple devices of the same type, and satisfies just the Deterministic Precision condition when run on different devices with a compatible instruction set.

It is immediately apparent that the immortal component is the program and not the hardware - all physical things have finite lifetimes - but software that can be stored indefinitely and run anywhere is truly immortal.

The core design mechanisms of Immortal Computers are:

Zero Defects: The part is offered for sale based on having no user visible defects, achieved via thorough testing and redundant hardware.

Design Margin: Manufacturing and environmental variability is addressed via careful margining at design time plus assumptions/limits on range of variability.

Static Compensation: Variability is further addressed by simple static settings for deployment, such as running fast and energy hungry parts at a lower voltage than slow and lower energy parts.

Simple Automatic Adaptation: Basic control loops adjust just one or two system variables dynamically to keep operation within spec. Dynamic voltage and frequency scaling is a primary example.

These solutions generally outperform the spec sheet under almost all conditions, but this additional performance is not usable or monetised. As transistors shrink and advanced packaging introduces an array of challenges around thermal management, defectivity and cost, this over-margining becomes increasingly onerous. At the very same time, existing systems like large-scale supercomputers [5], advanced robots operating in complex environments [6,7] or products that employ AI using lifetime learning [8] are already facing challenges in maintaining the determinism and fungibility demanded by the Immortal Computing paradigm.
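As a rough illustration of the Static Compensation and Simple Automatic Adaptation mechanisms listed above, here is a minimal Python sketch. The speed bins, voltages, thermal limit and frequency range are all hypothetical values chosen for illustration, and the function names `static_voltage` and `dvfs_step` are not taken from any real driver or product.

```python
# Hypothetical speed bins and supply voltages, for illustration only.
STATIC_VOLTAGE_BY_BIN = {"fast": 0.75, "typical": 0.80, "slow": 0.85}


def static_voltage(speed_bin: str) -> float:
    """Static Compensation: fast, energy-hungry parts get a lower voltage than slow ones."""
    return STATIC_VOLTAGE_BY_BIN[speed_bin]


def dvfs_step(freq_mhz: int, temp_c: float, *, limit_c: float = 85.0,
              step_mhz: int = 100, min_mhz: int = 800, max_mhz: int = 3000) -> int:
    """Simple Automatic Adaptation: one iteration of a single-variable control loop.

    Back off one frequency step when above the thermal limit, otherwise creep
    back up one step, always staying inside the [min_mhz, max_mhz] range.
    """
    if temp_c > limit_c:
        return max(min_mhz, freq_mhz - step_mhz)
    return min(max_mhz, freq_mhz + step_mhz)
```

Note how little the loop knows: one sensor, one actuator and fixed limits. That simplicity is what keeps behaviour predictable, and it is also why headroom above the spec sheet goes unused.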

Rather than seeing Hinton's Mortal Computing concept as being limited to development of future novel circuit structures, this article seeks to develop a broader and more widely applicable definition suitable for near term investment.

Weakly Immortal Computing

Some first steps towards Mortal Computing are present in existing mainstream devices, already eroding long-held assumptions about what attributes computers should and should not embody.

Some good examples of existing "Weakly Immortal" device-level computing solutions are:

Advanced Defect Mitigation: as in the Cerebras wafer scale engine [9]

Multi-variable Adaptation: as in high speed wired SERDES and 5G/6G wireless modems [10, 11]

Workload-aware runtime optimisation: as in some multi-processor systems [12]

There remains much near term potential in deepening and extending these mechanisms in contemporary devices, especially by leveraging new expertise and discoveries in AI.

Weakly Immortal designs based on established silicon technology may exhibit individual device performance-cost-energy gains of 1.5X to 5X over Immortal solutions.
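To make Advanced Defect Mitigation concrete, the sketch below shows a generic spare-based remapping: software sees a contiguous set of logical cores, while a defect map steers them onto healthy physical cores. This is an assumed, simplified scheme for illustration, not a description of how Cerebras or any other vendor implements it; `build_core_map` and its parameters are hypothetical.

```python
def build_core_map(physical_cores: int, defective: set, logical_count: int) -> dict:
    """Map logical core ids 0..logical_count-1 onto healthy physical cores.

    Defective physical cores are skipped, so spare cores silently absorb
    manufacturing defects and software still sees a defect-free device.
    """
    healthy = [core for core in range(physical_cores) if core not in defective]
    if len(healthy) < logical_count:
        raise ValueError("not enough healthy cores to meet the advertised core count")
    return dict(enumerate(healthy[:logical_count]))
```

For example, with 10 physical cores, defects at cores 2 and 5, and 8 advertised cores, logical core 2 lands on physical core 3 and logical core 7 on physical core 9. A third defect would make the part unsellable under this scheme, which is the kind of cliff edge that graceful degradation aims to remove.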

Defining an Environment for a computer

Designing a Mortal Computer starts with understanding its environment. There are three main parts to a computer's environment, from the perspective of the software (drivers, OS, middleware) that operates the computer:

World: Temperature, humidity, power supply and locale, over which the program has little or no influence.

Substrate: The conditions within the computer itself, and within its silicon components, such as local power supply conditions, defectivity and so on. Since we consider the environment as seen by software that manages the computer, the computer hardware is as much part of the environment as the world outside. The program has more, but still limited, capacity to influence these conditions. Modern processors are woefully under-equipped in this area.

Workload: The software operating layer of the computer experiences the varying workload demands placed upon it as part of its environment, to which it must respond, but to which it can also adapt just like it can to its physical environment. Workload awareness has been explored but remains hugely underexploited, partly out of fear of incurring variable performance.
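One way to picture this three-part environment is as a single data structure that the operating software observes. The sketch below is only an assumption about how such a structure might look; every field name (`ambient_temp_c`, `defective_units`, `queue_depth` and so on) is hypothetical and chosen for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class World:
    """External conditions the program has little or no influence over."""
    ambient_temp_c: float
    humidity_pct: float
    supply_voltage_v: float


@dataclass(frozen=True)
class Substrate:
    """Conditions inside the computer and its silicon."""
    local_voltage_v: float
    die_temp_c: float
    defective_units: frozenset = frozenset()


@dataclass(frozen=True)
class Workload:
    """Demand placed on the software operating layer."""
    queue_depth: int
    utilisation: float  # 0.0 to 1.0


@dataclass(frozen=True)
class Environment:
    world: World
    substrate: Substrate
    workload: Workload

    def observations(self) -> dict:
        """Flatten all three parts into one dictionary of named sensor readings."""
        flat = {}
        for part_name in ("world", "substrate", "workload"):
            for key, value in vars(getattr(self, part_name)).items():
                flat[f"{part_name}.{key}"] = value
        return flat
```

Treating the substrate and workload as peers of the outside world is the point of the structure: a controller reading `observations()` makes no distinction between a hot room, a weak power rail and a demanding job.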

Weakly Mortal Computing

Weakly Immortal mechanisms blur into the Weakly Mortal as engineers are forced to adopt much more complex adaptation and defect mitigation. At some point it becomes easier to change the design mindset from one of aiming for computing Immortality no matter what, to one that aims to make the first workable Mortal Computing devices that knowingly trade determinism and fungibility for more workable and superior performing solutions.

Weakly Mortal designs employing various types of analogue (electrical, optical, neuromorphic) and new memory technology may exhibit 10X to 100X performance-cost-energy gains over Immortal solutions, with a key focus on addressing defectivity.

The investment prospect of Weakly Mortal computing solutions is exciting since it combines:

(a) the near-term technical challenge of extracting a good deal more performance from existing computing technologies by employing more sophisticated (biomimetic) environment/workload adaptation and defect tolerance

(b) identifying commercial models and use cases that can accommodate a wider range of performance variability and/or reduced fungibility

(c) the cultural project of building a new engineering community that dares to move beyond the dogma of engineering perfection and homogeneity at scale.

This new engineering culture will be rewarded with the option of developing and applying innovations covered later that transcend the Immortal and for the first time explore truly Mortal Computing.

Great near- to medium-term Weakly Mortal computers will break new ground in the following areas:

Intelligent Adaptation: Advanced cybernetic/biomimetic control of many variables, assisted by many sensors that monitor world, substrate and workload to keep operation within spec and to outperform when conditions allow. A good example would be fine-grained runtime thermodynamic optimisation of a future mobile or data centre processor [12, 13]. This is harder than it sounds, which is why it has never been attempted in any commercial device.

Defect Tolerance: Graceful degradation in performance as defect density rises and rises, handling significant lifetime degradation and hostile environments for the first time. Dealing with this requires more than the capabilities offered by the Defect Mitigation mentioned earlier. A good example would be a future neuromorphic processor composed of many stacked silicon dies and its mortal operating system [14], or a humanoid robot's joints and motors aging [7].

Variable Performance: Devices are for the first time sold on the basis that their individual performances may have a high variance (to the upside), combined with use cases and a technology culture that is keen to exploit this [15]. A smart drone swarm where performance per batch rather than per individual is priced is one example [16].
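The difference between pricing per individual and pricing per batch can be sketched as two acceptance tests over the same set of measured unit scores. This is an illustrative assumption about how such a spec might be written; the function names and thresholds are hypothetical.

```python
def per_unit_ok(scores: list, min_score: float) -> bool:
    """Immortal-style spec: every single unit must meet the minimum."""
    return all(score >= min_score for score in scores)


def batch_ok(scores: list, min_mean: float, max_dead_fraction: float) -> bool:
    """Mortal-style spec: the batch as a whole must perform.

    A unit scoring 0 or less counts as dead. The batch passes if the fraction
    of dead units is tolerable and the mean score over all units (dead ones
    included) meets the target.
    """
    if not scores:
        return False
    dead_fraction = sum(score <= 0 for score in scores) / len(scores)
    mean_score = sum(scores) / len(scores)
    return dead_fraction <= max_dead_fraction and mean_score >= min_mean
```

A batch with scores `[1.0, 1.2, 0.0, 1.4]` fails a per-unit minimum of 1.0 because of the one dead unit, yet passes a batch spec of mean 0.8 with up to 30% dead units. The strong performers carry the batch, which is the upside variance the Variable Performance model wants to monetise.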

Critical Attributes of Mortal Computing

To succeed in these strategies, designers should consider three fundamental cybernetic requirements that Alexander Ororbia and Karl Friston detail in their pioneering 2023 paper.

Ultrastability: The ability of a system to meaningfully change its internal organization or structure in response to environmental conditions that threaten to disturb a desired behaviour or value of an essential variable. The changes such systems are capable of are qualitative in the sense of changing the mode of interaction with an environment in steps or jumps, not along a continuum, and they are purposeful because such systems seek a behaviour that is disturbance defying. This is strongly related to the Non-Equilibrium Steady State (NESS) concept from the discipline of Non-Equilibrium Thermodynamics (the open-system thermodynamics of living things and complex systems).

Requisite Variety: A controller can only properly control a system if it has sufficient optionality in the actions it can take to adapt its own internal configuration, where this variety matches the underlying complexity of the environment. Again, current processors are far from meeting this requirement.

The Good Regulator: A competent controller of a system must possess a generative model of that system and its relevant environment. The term generative here does not imply use of genAI, merely the ability to predict the future state of a system. This controller must represent a minimal overhead on the system.

By now it should be apparent how Mortal Computing solutions distinguish themselves from their Immortal ancestors. To the best of the author's knowledge, no existing solutions meet even two, let alone all three, of these criteria for a minimum viable cybernetic solution.
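A toy sketch can show how the three requirements fit together. It is an illustration written for this note under simplifying assumptions, not an implementation from the Ororbia and Friston paper: the operating modes, the linear thermal model and all numbers are hypothetical.

```python
# Hypothetical operating modes: the controller's repertoire of actions.
MODES = [
    {"name": "turbo", "heat_per_load": 60.0, "throughput": 1.0},
    {"name": "balanced", "heat_per_load": 40.0, "throughput": 0.7},
    {"name": "eco", "heat_per_load": 20.0, "throughput": 0.4},
]


def predict_temp(ambient_c: float, load: float, mode: dict) -> float:
    """Good Regulator: a (toy, linear) model predicting die temperature for a mode."""
    return ambient_c + load * mode["heat_per_load"]


def choose_mode(ambient_c: float, load: float, limit_c: float = 90.0, modes=MODES):
    """Ultrastability: jump between discrete modes to protect an essential variable.

    Returns the name of the fastest mode whose predicted temperature stays
    within the limit, or None when no mode can. None means the controller
    lacks Requisite Variety for this environment.
    """
    for mode in sorted(modes, key=lambda m: m["throughput"], reverse=True):
        if predict_temp(ambient_c, load, mode) <= limit_c:
            return mode["name"]
    return None
```

At full load the controller picks `turbo` at 25 °C ambient, steps to `balanced` at 40 °C and to `eco` at 60 °C. At 80 °C it returns `None`: three modes are not enough variety for that environment, and only a richer repertoire of actions would help.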

Consider Paramecium, a ubiquitous single-celled organism. It implements all three of the Critical Attributes of Mortal Computing without having a single neuron [17,18,19]. To produce affordable computing machines that have a wide range of real-world competencies and resilience, it is this feat we must understand and replicate as the first order of business.

Strongly Mortal Computing

Buoyed by success with Weakly Mortal computing solutions, attention can finally turn to Strongly Mortal computing. The engineering and scientific community established to deliver Weakly Mortal solutions will now focus on the following new mechanisms:

Intelligent Agency: This extension of Intelligent Adaptation includes meaningful ways for the adaptive system to influence its local environment, which ultimately is a necessary condition for independent mortal systems to persist. The most obvious agency is a robot that can move toward rewarding conditions or away from threat and harm [20]. However, movement is not mandated. A data centre device that can message a higher controller in a data warehouse can at least influence that controller to act to improve the requester's local conditions, such as cooling.

Stochastic Outcomes: Individually unreliable computing elements may, as an ensemble, produce results that are reliable and deterministic in a stochastic sense, but which will give different answers each time the program is run. The best example of this is the probabilistic computer [21] (see inset below). Another example would be a robot visual cortex implemented with stochastic/approximate computing that must handle trillions of pixels per hour, especially if the higher functions of that machine have less stochasticity [23].

Simple Self Repair: A machine can enact basic self-repair operations for the most common or harmful hardware faults. A robot with manipulators and intelligence that can replace some of its own parts [24, 25], or a supercomputer that includes fully robotic maintenance, would qualify [26]. While both these cases may seem like cheating since the spare parts are not within the machine's capability to produce, the complexity of making even this limited self-repair operation sustainable and stable should not be underestimated.

Advanced Self Repair: A machine that has a wide range of minor and major self-repair capabilities and which can manufacture at least some replacement parts [24, 25].

Self-Replication: A machine that is capable of producing a copy of itself without dying, or of producing at least two copies before ceasing to exist [27].
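The Stochastic Outcomes idea, that unreliable elements can be reliable as an ensemble, can be illustrated with a simple majority vote over noisy logic gates. This is a generic textbook-style sketch, not a model of any particular device; the 20% error rate and ensemble size are arbitrary assumptions.

```python
import random


def unreliable_and(a: int, b: int, error_rate: float, rng: random.Random) -> int:
    """A single AND gate that returns the wrong bit with probability error_rate."""
    correct = a & b
    return correct ^ 1 if rng.random() < error_rate else correct


def ensemble_and(a: int, b: int, n: int, error_rate: float, rng: random.Random) -> int:
    """Majority vote over n independent unreliable gates (use an odd n to avoid ties)."""
    votes = sum(unreliable_and(a, b, error_rate, rng) for _ in range(n))
    return 1 if votes * 2 > n else 0
```

With a 20% per-gate error rate, a single gate is wrong about one time in five, while a vote over 101 gates is wrong only if more than half of them fail at once, which is vanishingly unlikely. The individual votes still differ from run to run, so the computation is reliable without being bit-for-bit repeatable.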

Potential examples of Strongly Mortal computing solutions that promise game-changing advantages (1000x and more), yet which are unlikely to be able to fulfil Immortal Computing requirements include:

novel computing such as all-optical [1], reversible [2], in-materio, probabilistic (see below) and thermodynamic [4] computing.

ultra low-cost printed and flexible electronics, deployed at scale [28]

radically new forms of memory [29, 30]

hybrid biochemical and silicon systems [31, 32]

Advanced self-repair and self-replication may well be impossible for systems relying purely on solid-state computation, but hybrids of biochemical and solid-state solutions might - one day in the far future - achieve self-replication.

Probabilistic computers (p-computers)

Probabilistic computers employ 'p-bits' and can generate billions of truly random bits which can be biased to arbitrary probabilities, leveraging intrinsic device physics to deliver billions of hardware entropy sources per device versus the few thousand that can be implemented on today's deterministic computers.

P-computers have wide application in more efficient and capable AI as well as scientific and financial computing. They also represent a near-term alternative to quantum computing that holds immediate promise for scaling using existing technology and which is well suited for the class of problems that quantum targets [22]. P-computer implementations will need Mortal Computing approaches both to tame device-level variability, so as to deliver billions of fair coin flips, and to develop new programming paradigms that cope with the inability to precisely repeat a previous calculation.
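For readers who want a feel for what a biasable random bit looks like, here is a software sketch of a p-bit. It uses a behavioural model commonly seen in the p-bit literature, in which the output is the sign of tanh(input) plus uniform noise; that choice of model is an assumption made here, not something specified in this note, and a pseudo-random generator stands in for the device physics.

```python
import math
import random


def p_bit(input_bias: float, rng: random.Random) -> int:
    """Return +1 or -1, with P(+1) = (1 + tanh(input_bias)) / 2.

    A bias of 0 gives a fair coin; a large positive or negative bias pins
    the output towards +1 or -1 respectively.
    """
    noise = rng.uniform(-1.0, 1.0)
    return 1 if math.tanh(input_bias) + noise > 0 else -1


def mean_output(input_bias: float, samples: int, rng: random.Random) -> float:
    """Average many p-bit samples; the result should approach tanh(input_bias)."""
    return sum(p_bit(input_bias, rng) for _ in range(samples)) / samples
```

Averaged over many samples, the output approaches tanh of the bias, so the bias acts as a dial on the probability. A real device with an unintended offset in that dial would produce unfair coins at zero bias, which is the kind of variability a Mortal Computing approach would have to measure and compensate for per device.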

As Richard Feynman observed:

"… the other way to simulate a probabilistic nature, … might still be to simulate the probabilistic nature by a computer C which itself is probabilistic, in which you always randomize the last two digits of every number, or you do something terrible to it. So it becomes what I'll call a probabilistic computer, in which the output is not a unique function of the input."

Timeline

Mortal solutions colonise computing from the margins.

At the high-value, low-volume end (right) of the distribution, the value of fungibility diminishes thanks to the small volume, while the economic benefit of significantly enhancing the performance of a single very expensive unit is obvious.

At the cheap, high-volume and disposable end of the distribution (left), individual unit failures out of billions are likely to have little or no impact economically or functionally. Fungibility can be expressed as a batch-level rather than per-unit concept.

The middle of the distribution is likely the most resistant to encroaching mortal solutions, albeit with the immortal solutions increasingly confined to the middle as time passes.

Development of Mortal solutions will rely heavily on digital twins and simulations, and for this purpose and others, the Immortal Computing paradigm will continue to remain important.

Mortal Solution Menagerie

The High Volume, Low value quadrant is especially interesting given the possibility of supporting such solutions with under-explored computing hard tech such as flexible and printed electronics, 3D-printed PCBs and new ways of thinking about defect tolerance.

The Devices quadrant applies Mortal design techniques to individual processors, as opposed to finished systems, especially so when the end systems they go into are significantly challenged by their environment or suffer from issues such as battery life.

The Low Volume, High Value quadrant is particularly relevant for new ways of tackling challenges in defence, biomedicine and super-computing.

The Systems quadrant is already imagining unprecedented levels of autonomy for future factories and robots, thus creating new opportunities for Mortal Computing at the level of both their constituent components and the systems themselves.

If you want to discuss, get in touch: lawrence@lunar.vc

References and Further Reading

All-Optical Computer Unveiled With 100 GHz Clock Speed | Discover Magazine

Normal Computing Unveils the First-ever Thermodynamic Computer

Cores that don't count | Proceedings of the Workshop on Hot Topics in Operating Systems

Review of Fault-Tolerant Control Systems Used in Robotic Manipulators

100x Defect Tolerance: How Cerebras Solved the Yield Problem - Cerebras

Evolution Of Equalization Techniques In High-Speed SerDes For Extended Reaches

Deep Learning Workload Scheduling in GPU Datacenters: A Survey

Frontiers | Adaptivity: a path towards general swarm intelligence?

Michael Levin | Cell Intelligence in Physiological and Morphological Spaces

Research reveals even single-cell organisms exhibit habituation, a simple form of learning

Frontiers | Artificial intelligence-based spatio-temporal vision sensors: applications and prospects

Self-healing materials for soft-matter machines and electronics | NPG Asia Materials

Self-replicating hierarchical modular robotic swarms | Communications Engineering

Synaptic and neural behaviours in a standard silicon transistor | Nature

Wild.