I’m Lawrence. A pleasure. I invest in computing hardware. Lunar invests in deep tech. Lots of people JUST LIKE YOU subscribe to this newsletter. These people are so smart and work in [INSERT TOP FUND NAMES]. If you subscribe you will also be smart and loved. If you are trying to make the world better for my children, please get in touch: lawrence@lunar.vc.

Workhouse to Worktheatre

Last month, an agent came for me in the night. It was called Deep Research (because SV can’t name stuff, o1 pro mode or o3 mini-high, rotfl). Good agents with bad names are coming for us all. We are the weavers now.

The automation story is as old as time. This time is rarely different from the last time. We all suffer The Tyranny of the Present. Time is a flat circle, as one of our greatest philosophers (Matthew McConaughey) once said. Anyway, what was my point? Oh yeah, that humans are scared of death. And that manifests as finding life in work to avoid thinking about it. The eternal void. If you think about it that way, you shouldn’t just expect people to go quietly into the good night. I’m telling you, there will be no great automation. No matter how good AI gets. And listen, I’m scale-pilled and ready to go. I’m not saying humans will do things better than AI; actually, I’m saying we won’t. I’m saying our political institutions can’t let it happen en masse. We vote for our politicians, mostly. Stop the Steal, etc. And the ones we don’t vote for need their citizens to have jobs. China, etc.

Today, I want to talk about occupational downgrading. There are three basic stories of what happens if AI gets good fast:

1. high% growth with mass unemployment = social breakdown (see: Elysium, In Time, Snowpiercer, The Hunger Games)

2. high% growth with mass unemployment = new social contract w/ UBI (see: Tomorrowland, Arrival, The Expanse, Star Trek)

3. high% growth with limited unemployment = performative work (see: Sorry to Bother You, Office Space, Brazil)

Story one is high probability, but too bleak to contemplate on a wet Wednesday morning on Substack. Story two means believing in fast political and institutional change. And story three is the most optimistic, albeit with quiet despair characteristics.

Which story do you choose to believe? And so it goes with God.

Lying with Numbers

I read that 60-75 million white-collar jobs globally face immediate disruption from AI. These roles—paralegals, analysts, writers, assistants—form the backbone of knowledge economies. I’ve seen projections that between 800 million and 1 billion jobs worldwide will be automated by 2030, with “40-50% of workplace tasks in developed economies already automatable with current technology.”

They buried the lede, team: “already automatable with current technology.” I’ll come back to that later. The standard response to these automation scare stories: "Don't worry, new jobs will emerge." After all, the mechanical loom transformed weavers into factory workers. Computers created entirely new knowledge work categories. Technology, we're reassured, has always created more jobs than it destroyed. We have drone pilots, and VR game designers, and TikTok influencers. Strong points by Dave from the pub.

But this time is different. Soz, but it really is. And we have to face it. Let Us Face the Future. This historical argument, while comforting, fails to recognise several ways AI automation differs from previous technological revolutions:

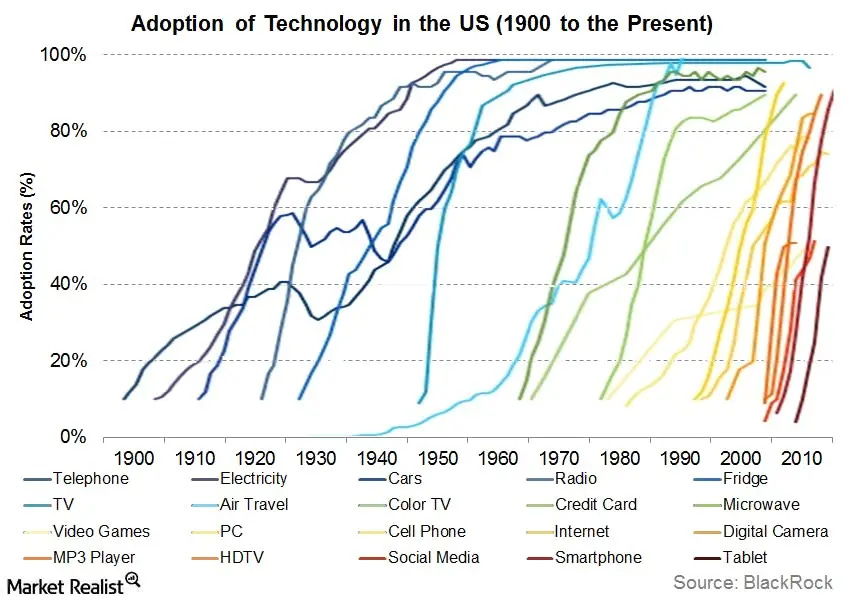

First, speed. Previous transitions played out over generations, allowing for gradual workforce adaptation through natural turnover. A farmer's son could become a factory worker; a factory worker's daughter could become an office worker. AI is compressing what once took generations into mere years. The steam engine took about 50-70 years to “go mainstream”. The automobile took 15 years for 20% adoption in the US.

Second, there's the problem of the abstraction ladder. Each prior technological shift pushed humans "up the ladder" toward more abstract work. Physical labour gave way to routine cognitive work, which is now giving way to... what exactly? If AI can write, analyse, code, and create, what's the next rung on the ladder? Previous transitions had a clear direction; this one doesn't.

Third, there's the end of complementarity. Previous technologies largely augmented human capability, making workers more productive. A person with a spreadsheet was more valuable than a person with a ledger book. But AI increasingly replaces rather than complements. A person with AI is often redundant rather than enhanced. With AI, the worker gets more productive right up until they are redundant. It’s a threshold.

Finally, there's the retraining mismatch. A 52-year-old accountant with 25 years of experience isn't going to become an AI prompt engineer or a quantum computing researcher. The skill gaps are too wide, the learning curves too steep, and the economic incentives too misaligned to facilitate mass mid-career transitions. I can’t retrain in nuclear engineering. Or NFT creation. That’s lots of years of education. And who’s paying? I have a mortgage. If you are a policymaker or politician thinking about “skills and training” to prepare for AI, you are doing it wrong.

So far, so obvious. But what if I told you: McKinsey are getting it wrong. I know, I know, but hear me out. Saying task X can be done more efficiently by an agent, so it will be, is basic. That’s not what’s gonna happen. People do not go gentle into that good night.

We're heading toward something more subtle and perhaps more insidious: mass underemployment disguised as continued employment. You’re welcome.

Bullshit Jobs: AI Theory

David Graeber's "bullshit jobs" framework provides a preview of this future. Graeber categorised meaningless work into five types: flunkies (who make others feel important), goons (who exist because competitors have them), duct tapers (who fix problems that shouldn't exist), box tickers (who allow organisations to claim they're doing something they're not), and taskmasters (who create or assign unnecessary work).

What's remarkable about this framework is how AI will not eliminate these roles but rather reinforce them, stripping away any remaining productive components while leaving the performative shell intact.

By the late 2020s, office jobs in developed countries will be primarily about overseeing AI systems—and even that "oversight" will be largely performative. The daily ritual of work will change subtly:

The morning begins with a team standup where everyone discusses what the AIs are doing. Mid-level managers check dashboards (probably Monday.com (open for sponsorship)) showing work they don't fully understand. More meetings. Yes, more. Meetings proliferate because they're the one place where humans still feel necessary. Box-ticking oversight roles expand, as humans are required by regulation or liability concerns to "approve" AI decisions they rarely override. Lawyers, accountants, and hospital administrators are probably going to be fine. Well, fine economically, anyway. Interestingly, this likely means more in-person days. Remote work is over. You can’t performatively perform your work from the coffee shop (I write this, of course, from a coffee shop).

The flunky role grows as companies need humans to provide a reassuring face for algorithmic processes. "Yes, I personally reviewed your application," says the loan officer. Duct tapers multiply, as humans are needed to smooth over the gaps between AI systems or handle edge cases, but lack the authority to fix systemic issues. I mean, when you say it out loud, this doesn’t *feel* that different from much of what’s happening around the world, right? (Sidebar: interestingly, if this scenario is correct, then we are unlikely to move en masse to “autonomous” systems and “cut out the middleman” because society will want a human connection. The Return of the Middle-man: bear case for crypto, btw.)

I predict office culture becomes more intensely social and political precisely because productive output becomes less relevant to advancement. HR departments gain unprecedented influence (sorry @Elon) as cultural fit and interpersonal dynamics become the primary differentiators in a world where technical skill is augmented or replaced by AI. Being the best writer, coder, creative, marketer, designer no longer matters. But do you remember Bob’s children’s names?

Many workers will experience "quiet guilt"—the nagging awareness that they're not really doing much at all. They fill their time with office chat or scrolling on their phones, occasionally rubber-stamping an AI decision they're contractually obliged to review. Their eight-hour workday contains perhaps two hours of genuine engagement. We realised Economic Possibilities for our Grandchildren but we didn’t gain leisure. We gained empty hours in an office? Sorry Keynes, people are weird.

The world isn’t destined for mass unemployment. It's destined for occupational downgrading and professional hollowing disguised as normal work.

The New Scarcities

Okay, so everything is sad. But the real quiz is who wins in this sad world? Answers in the comments please, here are a few of mine:

Legitimate authority will become infinitely more valuable than formal authority. In a world where anyone can direct AI to produce “work” products, the ability to command genuine respect and attention from other humans will be the true currency of organisational power. This isn't just charisma or interpersonal skills, but a deeper form of earned credibility that allows someone to influence decisions when algorithms cannot provide clear answers. This won’t be a meritocracy based on the “best performers”; remember, the AIs are the best performers. The UK’s class system may be instructive here. We have a thousand subtle markers of status that I’m oblivious to:

“He used the medium-sized spoon for his soup lol, he’s not one of us, don’t invite him to the library after dinner to decide if we do the merger”

These people aren’t playing Cluedo. These are British elites making decisions. Stop laughing at the back. This sort of class system based on if you were on the winning side of a civil war 350 years ago is coming for you too.

Relatedly, social navigation will take center stage as workplaces become primarily social rather than productive spaces. The capacity to build authentic relationships amid increasingly performative environments will distinguish those who thrive from those who survive. This goes beyond networking or politics. It is the ability to create meaning through human connection. The most valued employees will be those who can transform hollow meetings into genuine exchanges, and who can maintain psychological safety in environments where everyone quietly fears their own obsolescence. As substantive work goes away, the ability to create communities of genuine support and collaborative meaning-making becomes not just a nice-to-have but an essential survival skill.

Lastly, following on from last week, wisdom and taste will emerge as the last frontier of human advantage. While AI excels at pattern recognition, optimisation, and even creativity within defined parameters, it cannot replicate the ineffable human capacity for judgment based on experience, values, and aesthetic sensibility. The discernment to know when efficiency isn't the point, when an elegant solution matters more than an optimal one, or when human concerns should override algorithmic recommendations will become invaluable. This isn't mere contrarianism but a refined capacity to recognise what matters beyond what can be quantified. Those who can articulate why a technically perfect solution *feels* wrong, or why an imperfect solution resonates deeply, will provide value that no algorithm can replicate. In a world of AI-generated abundance, the ability to distinguish what is worth paying attention to becomes the ultimate scarce resource.

UBI with Full-Employment Features

This vision of the future challenges a central assumption in discussions about Universal Basic Income (UBI). The conventional wisdom suggests that as AI eliminates jobs, we'll need UBI to support the unemployed masses. But what if widespread unemployment never materializes?

The more disturbing possibility is that humans need work—not just income—for psychological wellbeing. Idle hands, etc. Organisations might maintain human employees partly because humans need to work, not just because firms need humans. People crave the purpose, identity, social connection, and structure that meaningful work provides.

I think we will maintain economically unnecessary jobs because the alternative, acknowledging that humans have no use (as traditionally defined by “the labour we can provide”), is too psychologically and socially disruptive. UBI solves an economic problem that might never arise while failing to address the deeper psychological and social problem that almost certainly will: the need for meaning in a world where productive contribution is no longer tied to economic necessity.

Occupational Downgrading

This transformation poses profound challenges not just economically but psychologically and socially. The quiet despair of performative work may prove more insidious than outright unemployment precisely because it lacks visibility and urgency.

The data supports this direction. Despite accelerating AI capabilities, unemployment rates remain historically low while productivity metrics show puzzling patterns. This disconnect between productivity and employment will likely widen as AI advances further.

The automation paradox thus reveals itself: AI won't create a jobless future, but rather a future where jobs persist without the substance that made them meaningful. The challenge isn't finding new jobs, but finding new meaning in a world where productive work is increasingly handled by machines.

For meat-people, several paths emerge: developing judgment that transcends algorithms, building authentic communities outside performance metrics, actively shaping how AI is deployed rather than passively accepting it, and cultivating identity and purpose beyond traditional work.

For policymakers, the challenge is acute. Without the crisis of mass unemployment to motivate action, we risk a slow-motion collapse of meaning that remains statistically invisible. New metrics beyond employment rates will be needed – measures that capture engagement, purpose, and wellbeing. Gross Happiness Index, anyone? Can anyone seriously see this happening as the Far Right and Populists gain the ascendancy? Colour me skeptical, sadly.

The real danger isn't a jobless future. It's a future where we maintain the theater of employment while emptying it of purpose, trapping millions in performative roles that satisfy an outdated economic model failing to nourish the human spirit.

Addressing this requires not just technological adaptation but a fundamental reconsideration of the relationship between work, worth, and meaning in an age where human productivity may no longer be economically necessary, but human purpose remains existentially essential.

Oh, also, did you know you can pledge on Substack? Last week a lovely man called Guneet Banga pledged £100/year. Thanks man, I feel valuable now.

Also, for God’s sake share this with your friends. Also go back and find the Life of Pi easter egg.

Byeeeee
